Work behind the paper: Quantitative Comparison Between A Traditional User Interface (GUI) and A Perceptual User Interface (PUI)

Self-directed research project at IIAD, Delhi

This article updates the original that I published on Medium in 2021.

During an academic project in my third year at IIAD, Shyam Attreya discussed how metropolitan cities in India, such as New Delhi, see long queues at places like hospitals & airports. This is primarily because certain processes, for example checking in at an airport, are handled by a limited number of personnel for a crowd much larger than they can efficiently serve.

Image source: India Today.

I observed a similar case at Max Hospital, Delhi. Upon entering the OPD, people would rush to the reception desk in a panic to ask questions, and the hospital staff ended up giving the same answers over and over.

I wondered whether these repetitive communication patterns could possibly be automated to increase

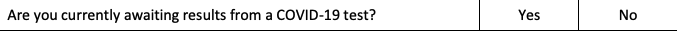

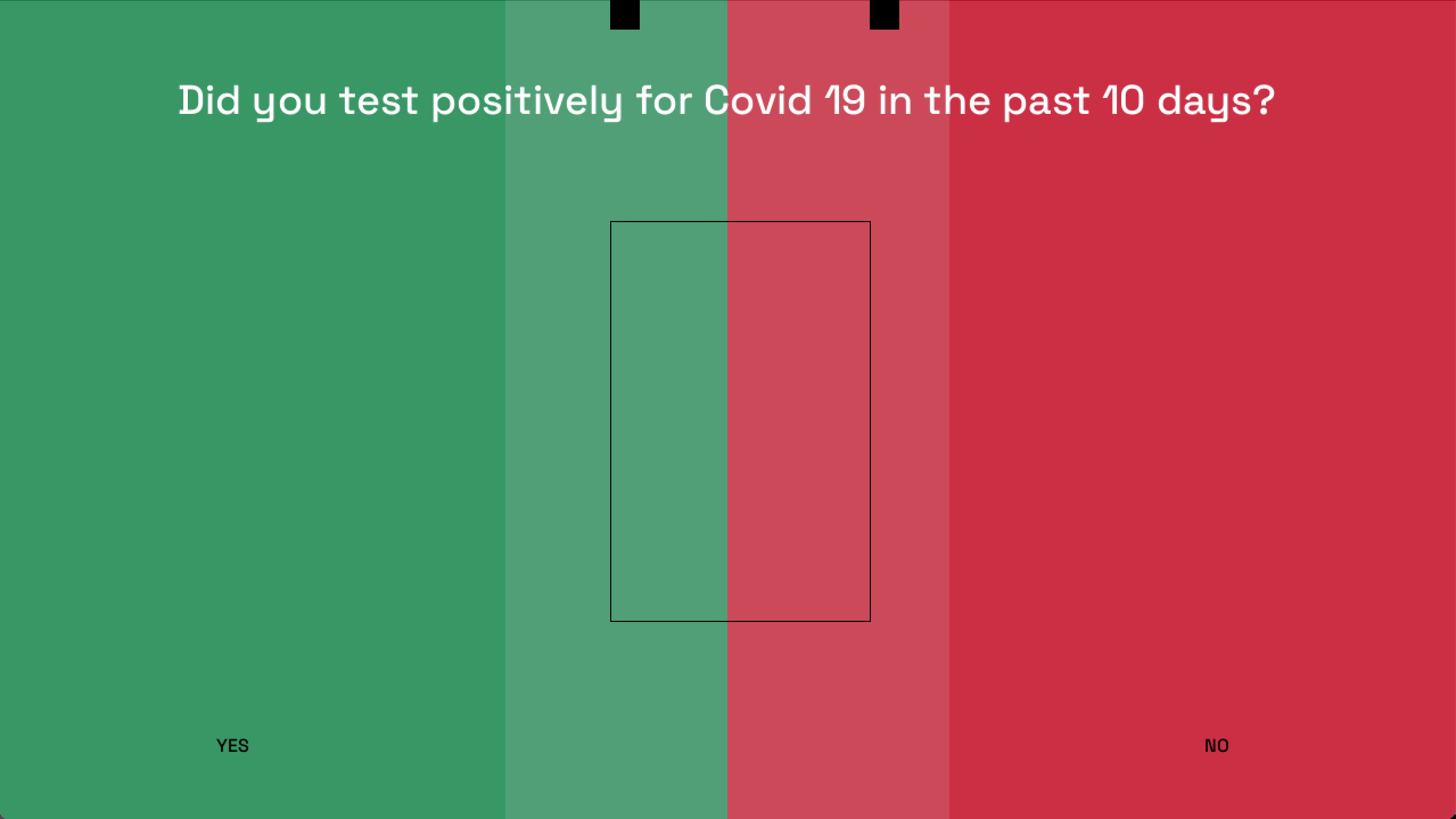

efficiency. For example, during COVID-19, healthcare workers asked questions with binary answer

possibilities to ascertain whether the individual is safe or at risk.

A question from the OSHA Sample Employee COVID-19 Health Screening Questionnaire.

It struck me that processes like this one, which collect responses to closed-ended questions, could be handled much faster with a simple digital intervention.

To maximise efficiency with minimal physical contact, I decided to break away from the traditional desktop paradigm (a display operated with a keyboard & mouse). A visual interface that could be operated through gestural input seemed far more suitable.

At the time, I had no experience building a gesture recognition program. However, I had been exploring what the webcam on my personal computer could do through small experiments in Processing.

An experiment built on Daniel Shiffman's blob-tracking algorithm, later converted into an object-driven e-book reader.

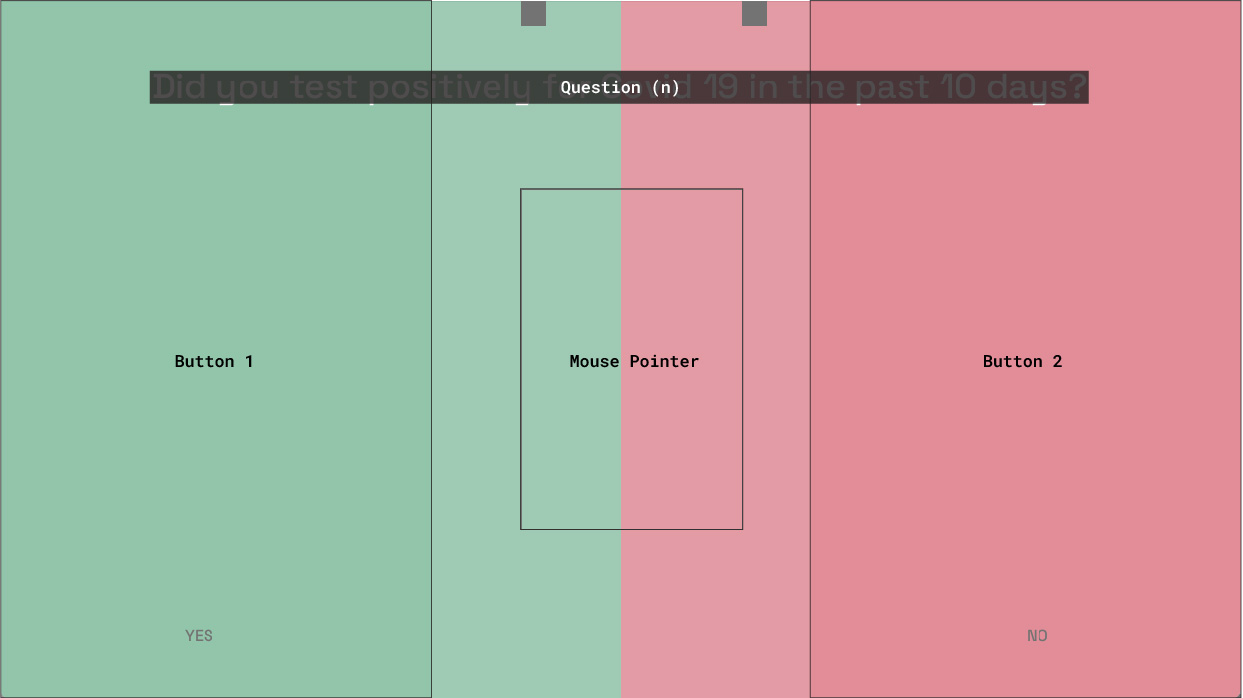

I realised that an interface collecting binary answers doesn't necessarily need a gesture recognition program. A simple interface that lets a person communicate their choice between Option A & Option B is enough. To achieve this, I used the head as a replacement for the mouse, with the two answer options placed on either side of the screen. A simple head tilt was enough to enter the desired answer.
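The selection logic behind a head-as-mouse interface like this can be very small. The original was written in Processing; the following is only a minimal Python sketch of the idea, assuming a face or blob tracker (not shown) that reports the head's horizontal position normalised to the frame width, from 0 at the left edge to 1 at the right. The zone edges (0.35 and 0.65), the five-frame hold used to ignore jitter, and the names `classify` and `select_answer` are hypothetical choices for illustration, not values or code from the study.

```python
from enum import Enum
from typing import Iterable, Optional


class Choice(Enum):
    OPTION_A = "A"  # answer shown on the left side of the screen
    OPTION_B = "B"  # answer shown on the right side of the screen


def classify(head_x: float, left_edge: float = 0.35, right_edge: float = 0.65) -> Optional[Choice]:
    """Map a normalised horizontal head position (0 = left, 1 = right) to a choice.

    Positions between the two edges form a neutral zone and return None.
    Assumption: the camera image is already mirrored so that tilting
    towards the left of the screen lowers head_x.
    """
    if head_x < left_edge:
        return Choice.OPTION_A
    if head_x > right_edge:
        return Choice.OPTION_B
    return None


def select_answer(positions: Iterable[float], hold_frames: int = 5) -> Optional[Choice]:
    """Return the first choice held for `hold_frames` consecutive frames, else None.

    Requiring a short hold (a hypothetical debounce) keeps a brief,
    accidental head movement from registering as an answer.
    """
    current: Optional[Choice] = None
    count = 0
    for x in positions:
        choice = classify(x)
        if choice is not None and choice == current:
            count += 1  # same side as the previous frame: keep counting
        else:
            current = choice  # side changed or returned to neutral: restart
            count = 1 if choice is not None else 0
        if current is not None and count >= hold_frames:
            return current
    return None
```

For example, `select_answer([0.5, 0.2, 0.2, 0.2, 0.2, 0.2])` starts in the neutral zone, then holds the left zone for five frames and returns `Choice.OPTION_A`. A dwell-style hold like this is one common way to turn a continuous pointer into a discrete choice; the interface in this project may have confirmed answers differently.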

A small demonstration of the interface described above, programmed in Processing.

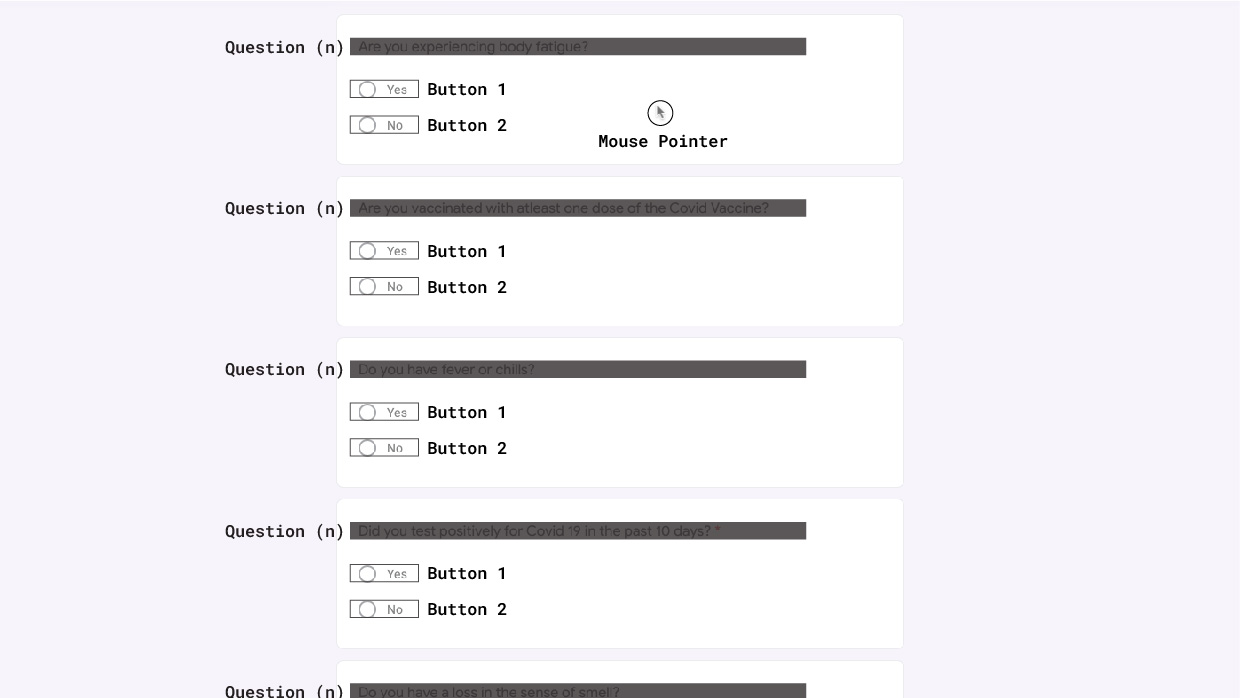

I then refined the interface design slightly to improve readability and make the two options easier to tell apart. I deliberately left out any other usability enhancements so that the comparison stayed restricted to the efficiency of the input modalities. For the traditional interface (GUI), I used a Google Forms form containing the same OSHA COVID-screening questions as the perceptual interface.

The final interface.

A breakdown of the elements of the head-tracked interface.

For comparison, a breakdown of the Google Forms interface used in the test.

During my literature review on the development & testing of perceptual interfaces, I was surprised to find no quantitative data on how much better a perceptual interface could be when compared to a graphical one. Yet the general consensus seemed to be that perceptual interfaces are better, faster & easier to use. But by how much?

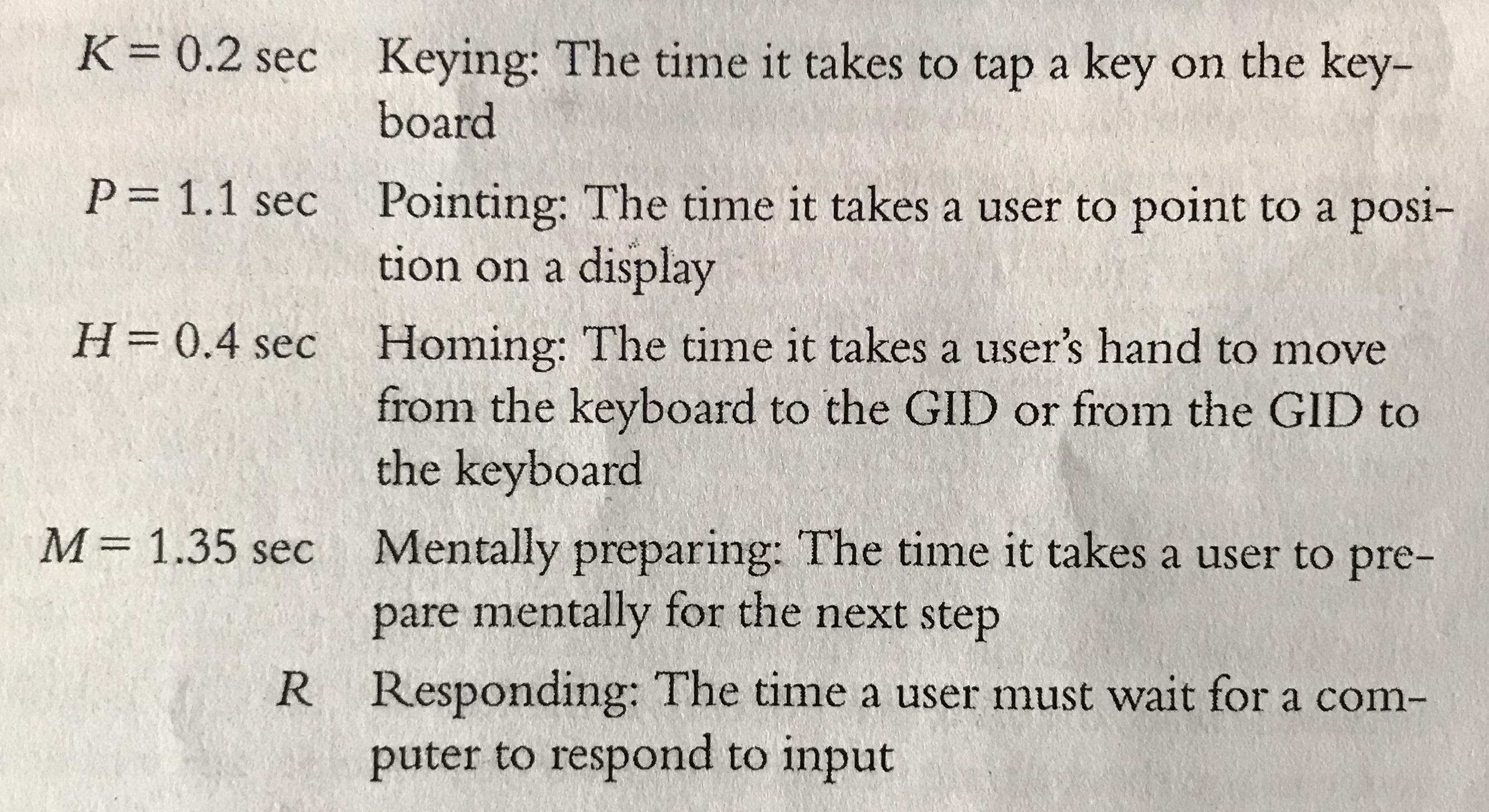

I decided to pit the two interfaces against each other and compare the time taken to perform a task (in this case, answering some of the OSHA COVID screening questions). To record data, I adapted the GOMS Keystroke-Level Model found in The Humane Interface by Jef Raskin. The model states that the total time taken to complete a task on a computer system is the sum of the times of the elementary gestures that the task comprises (Raskin). This made it simple to break down an interaction on an interface into an equation of time using a simple mnemonic system (shown below).

Source: The Humane Interface, by Jef Raskin.
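The additive nature of the model is easy to express in code. This is a minimal Python sketch, assuming the standard operator times Raskin lists (K = 0.2 s, P = 1.1 s, H = 0.4 s, M = 1.35 s, with R depending on the system's response time). It does not reproduce the adjustments made for the head-tracked interface, and the function name `klm_time` and the example sequence are hypothetical.

```python
# Operator times in seconds for the keystroke-level model, as listed by Raskin.
# Assumption: these defaults apply to the GUI; a perceptual modality would
# need its own, separately measured operators.
OPERATOR_TIMES = {
    "K": 0.2,   # keying: press a key or mouse button
    "P": 1.1,   # pointing: move a pointer to a target on screen
    "H": 0.4,   # homing: move a hand between keyboard and pointing device
    "M": 1.35,  # mentally preparing for the next step
}


def klm_time(sequence: str, response_time: float = 0.0) -> float:
    """Estimate task time as the sum of its elementary operators.

    `sequence` is a string of operator letters such as "HMPK"; spaces are
    ignored. Each "R" adds `response_time`, the system's response delay.
    """
    total = 0.0
    for op in sequence.upper():
        if op.isspace():
            continue
        if op == "R":
            total += response_time
        elif op in OPERATOR_TIMES:
            total += OPERATOR_TIMES[op]
        else:
            raise ValueError(f"unknown operator: {op!r}")
    return total
```

For example, a hypothetical sequence of homing to the mouse, mentally preparing, pointing at an option and clicking gives `klm_time("HMPK")` = 0.4 + 1.35 + 1.1 + 0.2 = 3.05 s. Comparing two interfaces then comes down to writing out each one's operator sequence for the same action and comparing the sums.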

I adjusted the model to suit human-computer interaction through a perceptual modality, then conducted one-on-one tests in a moderated environment. The test had two phases. In the first, participants entered their answers using the head-tracked interface; in the second, they filled out the Google Forms form using a trackpad.

An interaction comparison of the same action (entering input, then scrolling or returning to the neutral state for the next question).

For the analysis, I broke down actions for Question 1 and Question 3 for all participants. Here is what I found:

Decrease In Input Time

Once participants had understood the question on screen, entering their answer took ~0.5 s less on the head-tracked interface. Participants shaved a little more than half a second off each answer, which suggests that in scenarios where time to complete a task matters (such as screening a long queue), a perceptual interface like this one can be more efficient.

Less User Effort

On average, a user performed ~1.625 fewer steps on the head-tracked interface. These steps include lifting the fingers off the trackpad after scrolling and other trackpad gestures made before reading the next question on the Google Forms form. I was not able to measure the actual user effort or energy expended.

Ease of Use

In follow-up questions, all participants said they found the head-tracked interface easier to use. However, as Atreyo & Alina (two of the participants) pointed out, the trackpad felt more natural to them because they use one every day. This raises an interesting question for future work: how much does digital literacy affect the effectiveness of a perceptual versus a traditional interface?

Together, these three findings give an empirical measure of how much better a perceptual user interface performed than a traditional one in this specific setting. However, the error rate in this experiment was 0%, which is not realistic for a computer-vision-based interface operating in a complex social environment. A follow-up to this research could apply a similar testing model in complex social environments, with a wider range of people using the interface.

My major reflection from this project is best articulated by Neri Oxman in her essay, Age of Entanglement. As interface and experience designers, we often separate the domains of engineering and design, forgetting that their confluence can be so much more powerful. Working in isolation, pushing technology on one side and design on the other, is in my opinion a limit on what we could achieve.

Writing a 4,000-word paper gave me great confidence in a skill I had been taught: assimilating large amounts of information, then analysing and critically dissecting patterns that can be articulated through specific mediums. The Double Diamond model, first introduced in my first year, has become ingrained in everything I do, and this project was no exception. I watched peers get lost in the sea of information, and when they asked me for help, my suggestions usually came down to using some form of the Double Diamond model, adapted to their specific context.

The most important reflection for other students is this one. If you’re cultivating ideas with a passion that isn’t quite shared by your immediate environment, I know how it feels. During parts of my project, I felt a lack of directed guidance, as no one in my college shared my passion for HCI, whereas the more typical areas of graphic design and “UI/UX” were discussed all the time. However, every single person I interacted with, even those not remotely interested in my field of study, contributed to my project. Yes, you might not get exactly what you’re looking for, but everybody has something to offer through their own experiences, knowledge and passion. Knowledge exists around you in forms that you sometimes hesitate to accept. Accepting it can make you feel less lost. And hey, you have books and the wonderful internet putting you on a level playing field with everyone else in the world. So, don’t hesitate. Ideate and make fearlessly, but with passion.

I acknowledge the efforts of my mentors, Prachi Mittal & Suman Bhandary, as well as my peers, Nikhil Shankar, Pratishtha Purwar, Muskan Gupta, Alina Khatri, Atreyo Roy, Kriti Agarwal, Navya Baranwal and Harshvardhan Srivastava, in helping me shape my argument. The librarians, Natesh & Paramjit, were also instrumental in helping me find suitable resources for my paper.

I’d also like to acknowledge the sessions with Shyam Attreya during his final year at IIAD, without which I probably wouldn’t have had the starting thought in the first place.

Finally, my gratitude goes to Daniel Shiffman, the Processing team and Peter Abeles for their respective contributions to open-source software and to spreading related knowledge, without which all of this would have remained an untestable hypothesis.