distance responsive type

2023

custom software

Thought

A lot of graphic design outcomes, for me, remain trapped in a power structure between the designer & the viewer: the same 16-point paragraph is viewed from a range of distances, resulting in varying experiences. Go too far, or too near, and the paragraph is no longer legible.

Image credits: Nvision.

Experiences are increasingly becoming responsive: user flows react to user-entered data, websites fit content to the size of the viewport, and components of buildings react to the way they are being perceived (see Responsive Environments at the MIT Media Lab). I wondered whether typography on a digital screen could do the same.

While many perceptual factors come into play when reading a piece of text on a screen, I decided to play around first with the simplest one: distance.

Logic

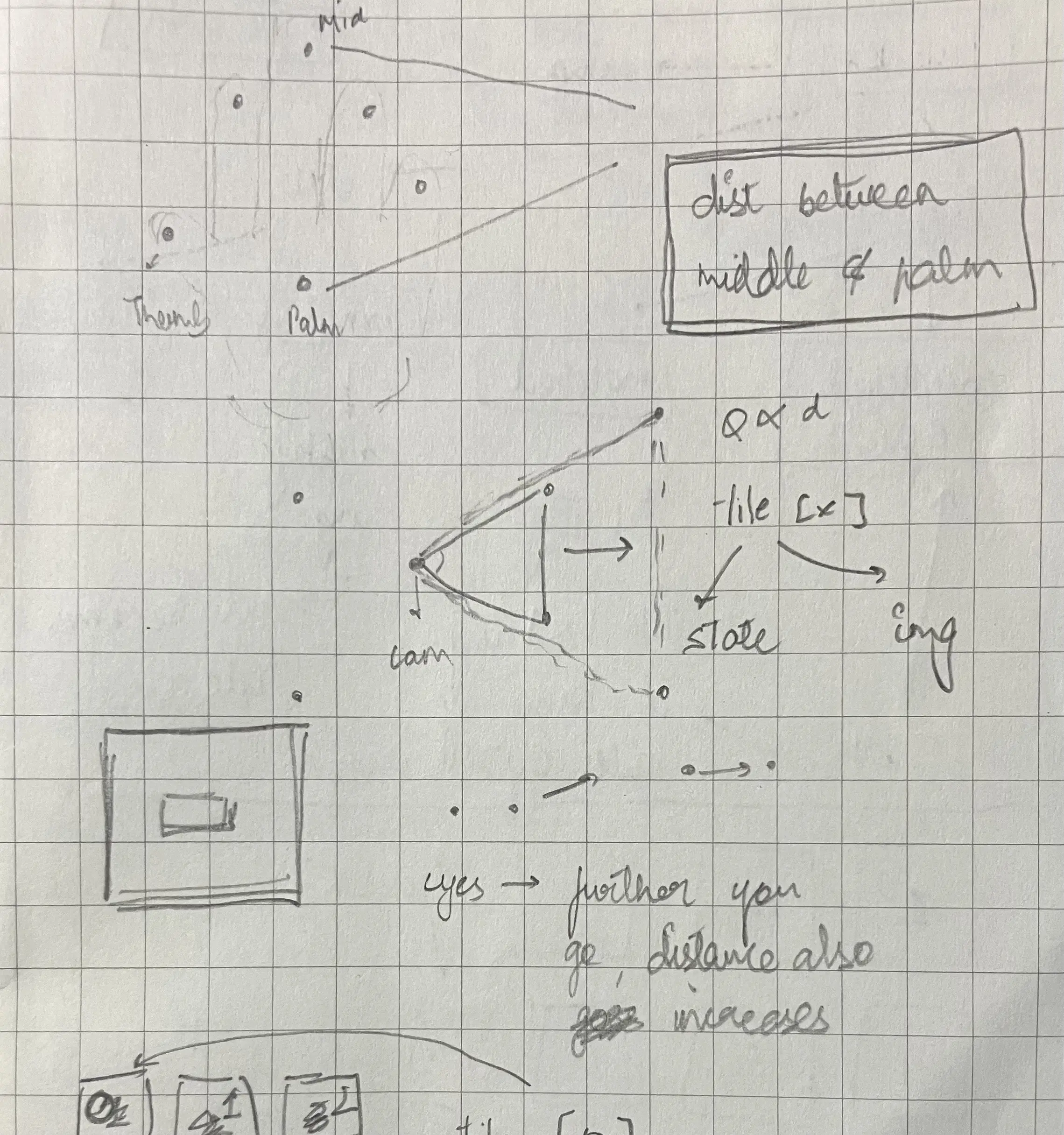

What’s interesting about the logic of the program is that webcams typically cannot sense depth, so they cannot directly report how far the eye has moved from the camera. What a webcam can do, with the help of TensorFlow’s PoseNet, is continuously track the position of each eye on a 2D plane.

The logic used is then simple: the farther the eyes move from the camera, the smaller the distance between the pupils in the image, and the closer the eyes come, the greater that distance.
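The relationship above can be sketched in plain JavaScript. This is only an illustration, not the project's code: the helper names, the pinhole-camera approximation, the focal length in pixels, and the 63 mm interpupillary distance (a commonly cited adult average) are all assumptions.

```javascript
// Hypothetical helper: pixel distance between two eye keypoints,
// each given as an object with x and y coordinates.
function pupilDistance(leftEye, rightEye) {
  return Math.hypot(leftEye.x - rightEye.x, leftEye.y - rightEye.y);
}

// Pinhole-camera approximation (an assumption, not from the original sketch):
//   pixelDistance ≈ focalLengthPx * realIpdMm / viewerDistanceMm
// Rearranged to estimate how far the viewer is from the camera.
function estimateViewerDistanceMm(pixelDistance, focalLengthPx, realIpdMm = 63) {
  return (focalLengthPx * realIpdMm) / pixelDistance;
}

// Halving the measured pixel distance doubles the estimated viewer distance.
const near = estimateViewerDistanceMm(100, 600);
const far = estimateViewerDistanceMm(50, 600);
console.log(near, far);
```

The program itself never needs the absolute distance. It only compares the pixel distance against thresholds, so no camera calibration is required.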

The font size was, therefore, tied to the distance between the two pupils. However, to prevent it from changing on every frame, I used the concept of breakpoints: once the distance crosses a certain threshold, the font size changes.

// Distance between the two eye keypoints, in pixels, truncated to an integer

d = int(dist(pose.leftEye.x, pose.leftEye.y, pose.rightEye.x, pose.rightEye.y));

// Ease the font size toward the target chosen by the breakpoints.

// minimum and maximum are the two threshold distances (their values are not given here).

function tChange() {

  fontSize = lerp(fontSize, newFontSize, 0.05);

  if (d < minimum) {

    // Eyes are far from the camera: use the largest size

    newFontSize = 56;

  } else if (d < maximum) {

    newFontSize = 16;

  } else {

    // Eyes are close to the camera: use the smallest size

    newFontSize = 8;

  }

}
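The breakpoint and easing logic above can also be tried outside p5.js. The following is a minimal sketch under stated assumptions: the threshold values of 60 and 120 pixels, the function names, and the `simulate` helper are hypothetical, and `lerp` is reimplemented here to mirror what p5.js provides.

```javascript
// Hypothetical thresholds in pixels; the project's actual values are not given.
const MIN_DISTANCE = 60;
const MAX_DISTANCE = 120;

// Linear interpolation, mirroring the behaviour of p5.js lerp().
function lerp(start, stop, amount) {
  return start + (stop - start) * amount;
}

// Breakpoints: pick a target size from the measured pupil distance.
function targetFontSize(d, min = MIN_DISTANCE, max = MAX_DISTANCE) {
  if (d < min) return 56; // viewer is far away
  if (d < max) return 16; // viewer is at a middle distance
  return 8;               // viewer is close
}

// Feed a sequence of per-frame distances through the easing step
// and collect the font size that would be rendered on each frame.
function simulate(distances, startSize = 16, easing = 0.05) {
  let fontSize = startSize;
  const sizes = [];
  for (const d of distances) {
    fontSize = lerp(fontSize, targetFontSize(d), easing);
    sizes.push(fontSize);
  }
  return sizes;
}

// A viewer who steps back and stays there for 200 frames.
const sizes = simulate(new Array(200).fill(40));
console.log(sizes[0], sizes[sizes.length - 1]);
```

Because the target only changes when a threshold is crossed, small jitters in the measured distance leave the text size alone, while the easing factor of 0.05 turns each jump between breakpoints into a gradual transition.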

All the actual code can be found here.