aidan nelson showed this in hypercinema_class-5.

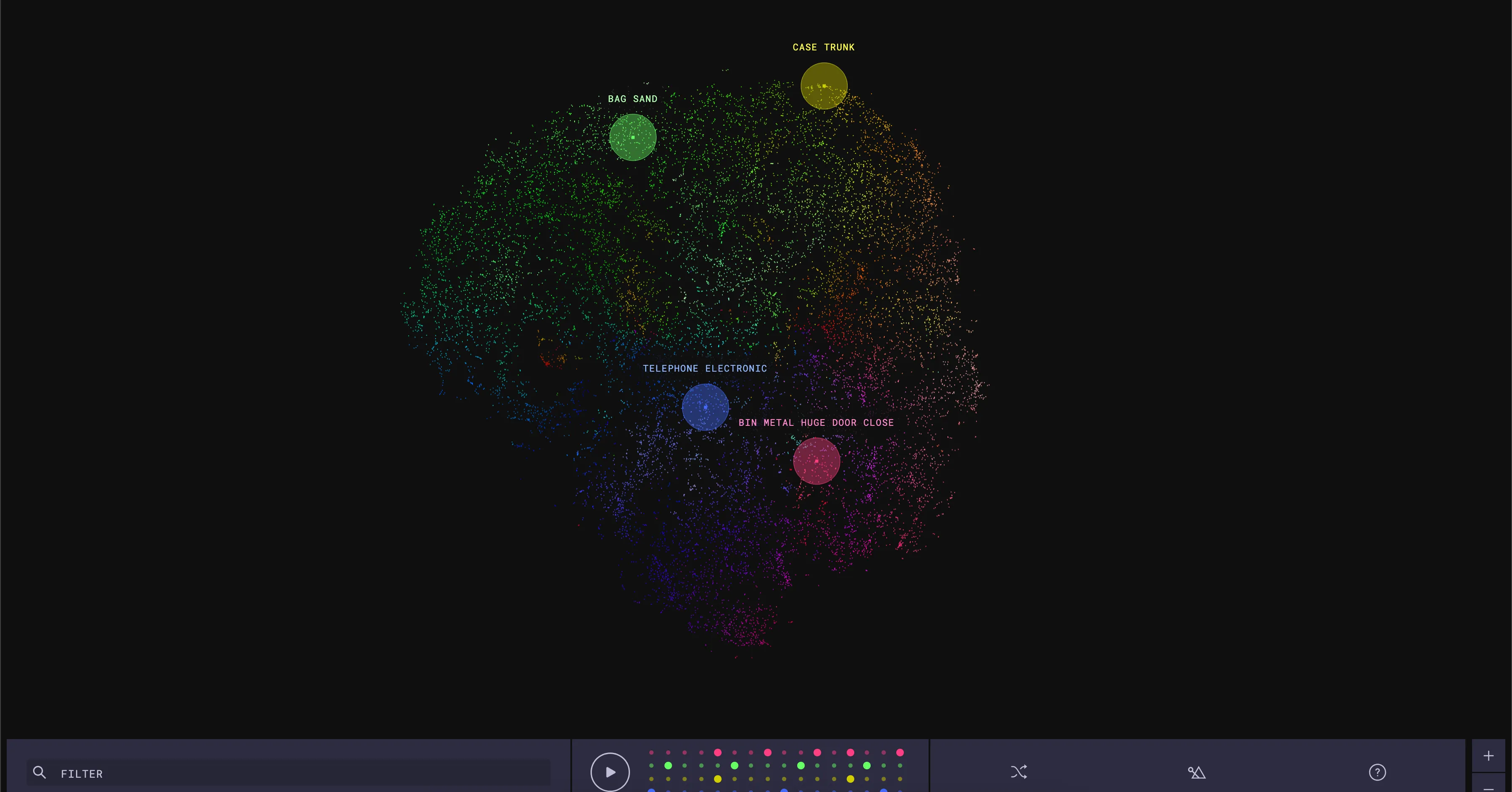

https://experiments.withgoogle.com/ai/drum-machine/view/

it’s a collection of sounds that a model analyses and then places on a map according to how similar it judges them to be, so similar sounds end up close together. people can then select sounds and put them on a drum sequencer.
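
a minimal sketch of the two ideas above, not the experiment's actual code: sounds get a position on a map, nearby sounds count as similar, and chosen sounds go onto a step sequencer. everything here is an assumption for illustration: the `positions` values are made up by hand (in the real thing a model would produce them from the audio), and `nearest` and `make_pattern` are hypothetical helper names.

```python
import math

# hypothetical 2-d map positions; a model would normally derive these from audio
positions = {
    "kick": (0.10, 0.20),
    "low tom": (0.15, 0.25),
    "snare": (0.60, 0.55),
    "clap": (0.65, 0.60),
    "hi-hat": (0.90, 0.10),
}

def nearest(name, k=2):
    """return the k sounds closest to `name` on the map (euclidean distance)."""
    origin = positions[name]
    others = [n for n in positions if n != name]
    others.sort(key=lambda n: math.dist(origin, positions[n]))
    return others[:k]

def make_pattern(tracks, steps=8):
    """tracks maps a sound name to the set of steps it plays on;
    returns, for each step, the list of sounds that trigger on it."""
    return [
        [name for name, hits in tracks.items() if step in hits]
        for step in range(steps)
    ]

# pick sounds from the map and place them on an 8-step sequencer
pattern = make_pattern({
    "kick": {0, 4},
    "snare": {2, 6},
    "hi-hat": {0, 2, 4, 6},
})

if __name__ == "__main__":
    print(nearest("kick", 1))
    for step, sounds in enumerate(pattern):
        print(step, sounds)
```

swapping a sound for one of its `nearest` neighbours is roughly what browsing the map feels like: you stay in the same region of timbre while changing the exact sample.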